Is this video spatial, or not?

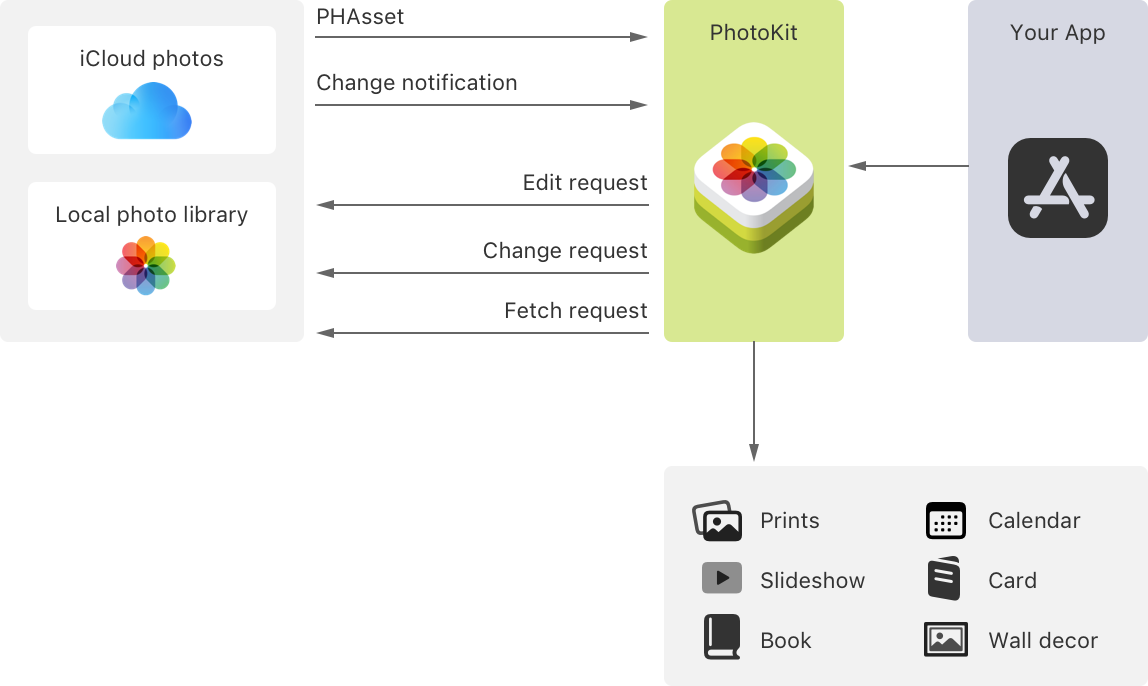

We have a feature in a visionOS app that lets users select videos from their library using the PhotosPicker from PhotoKit. Overall, its implementation is simple and effective, especially given everything that is going on behind the scenes.

We wanted to avoid restricting which types of video can be selected (and now that I think about it, I'm unsure how to even accomplish that), but that flexibility means that, once a video is selected, spatial videos need specialized treatment.

The process of using the picker involves creating a Transferable, managing URL permissions, and finally playing the content. We expected to be able to identify the type of video early on, so I went straight to figuring out how to extract metadata during the import, in the TransferRepresentation step. To do this, one can simply build an AVAsset from the received URL and load its video tracks:

```swift
let tracks = try await AVAsset(url: url).loadTracks(withMediaType: .video)
```

Then, each individual track can be queried for its format descriptions:

```swift
let descriptions = try await track.load(.formatDescriptions)
```

Each returned description is a CMFormatDescription, which comes with a set of functions to inspect its contents.
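The import step and the two snippets above can be put together roughly as follows. This is a minimal sketch rather than our production code: `PickedVideo` and `videoFormatExtensions(at:)` are hypothetical names, the picked content is assumed to arrive as `.movie`, and copying the received file into the temporary directory is just one assumed way of dealing with the URL permissions. `CMFormatDescriptionGetExtensions` turns each description into a dictionary of extensions, which is what the checks discussed below work with.

```swift
import AVFoundation
import CoreMedia
import CoreTransferable
import UniformTypeIdentifiers

/// Hypothetical model for a video received from PhotosPicker.
struct PickedVideo: Transferable {
    let url: URL

    static var transferRepresentation: some TransferRepresentation {
        FileRepresentation(contentType: .movie) { video in
            SentTransferredFile(video.url)
        } importing: { received in
            // The received file lives in a temporary location managed by the system,
            // so copy it somewhere the app controls (assumed approach).
            let destination = URL.temporaryDirectory
                .appending(path: UUID().uuidString)
                .appendingPathExtension(received.file.pathExtension)
            try FileManager.default.copyItem(at: received.file, to: destination)
            // Metadata can be inspected from here on, for example by awaiting
            // videoFormatExtensions(at: destination).
            return PickedVideo(url: destination)
        }
    }
}

/// Returns the extension dictionary of every format description
/// found in the video tracks of the file at `url` (hypothetical helper).
func videoFormatExtensions(at url: URL) async throws -> [[String: Any]] {
    let tracks = try await AVURLAsset(url: url).loadTracks(withMediaType: .video)
    var result: [[String: Any]] = []
    for track in tracks {
        let descriptions = try await track.load(.formatDescriptions)
        for description in descriptions {
            // CMFormatDescriptionGetExtensions returns nil when a description has no extensions.
            if let extensions = CMFormatDescriptionGetExtensions(description) as? [String: Any] {
                result.append(extensions)
            }
        }
    }
    return result
}
```

Nothing here touches a decoder: both the tracks and their format descriptions are loaded as asset properties, which is what makes inspecting the video before playback possible.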

Now, which of these to use? Naturally, the one that provides the most certainty for our objective. After some digging, kVTDecompressionPropertyKey_RequestedMVHEVCVideoLayerIDs seemed to be just that, as it indicates that playing that particular file requires the MV (Multiview) component of the HEVC format, commonly used for stereoscopic video. Unfortunately, this value remains unavailable until buffering begins (at least on visionOS?), which rules it out given our requirement to determine the type in advance.

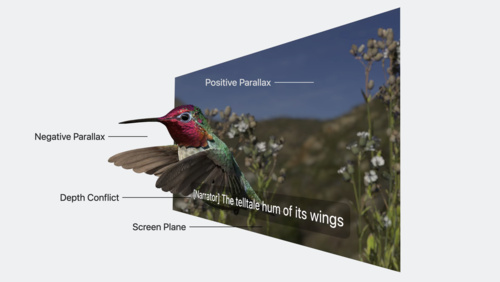

Screenshots from the "Deliver video content for spatial experiences" session, introducing and examining MV-HEVC

Another technique I've seen is to call CMFormatDescriptionGetExtensions and check kCMFormatDescriptionExtension_HorizontalFieldOfView, which holds the horizontal field of view in thousandths of a degree. In theory, a nonzero value for this key indicates that the video is spatial.
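A sketch of that check, assuming the extensions have already been bridged into a `[String: Any]` dictionary (as returned by `CMFormatDescriptionGetExtensions`). The function names are hypothetical; only the CoreMedia key comes from the framework.

```swift
import CoreMedia

/// Returns the horizontal field of view in degrees, or nil when the key is absent.
/// The stored value is expressed in thousandths of a degree.
func horizontalFieldOfView(in extensions: [String: Any]) -> Double? {
    let key = kCMFormatDescriptionExtension_HorizontalFieldOfView as String
    guard let thousandths = extensions[key] as? Int else { return nil }
    return Double(thousandths) / 1000
}

/// The heuristic: a nonzero horizontal field of view suggests a spatial video.
func looksSpatialByFieldOfView(_ extensions: [String: Any]) -> Bool {
    (horizontalFieldOfView(in: extensions) ?? 0) > 0
}
```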

Some outstanding projects that use this technique:

To be honest, I believe this method should work in most cases, as it has clearly been battle-tested, but the absence of a straightforward API for identifying this trait still bothered me. Additionally, a superstitious part of me considers the possibility of videos with a wide horizontal FOV that may not be stereoscopic.

So, if you go back and compare the output of CMFormatDescriptionGetExtensions for a regular video and then for a spatial one, you will notice some interesting differences. Among them, I believe the presence of HasLeftStereoEyeView and HasRightStereoEyeView in the video metadata could be a more reliable indicator of spatial (stereoscopic) videos.
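A minimal sketch of the eye-view check, again assuming a `[String: Any]` extensions dictionary; `hasBothStereoEyeViews(_:)` is a hypothetical name. Here a key only counts when it is present and set to true.

```swift
import CoreMedia

/// True when the format description flags both a left and a right stereo eye view.
func hasBothStereoEyeViews(_ extensions: [String: Any]) -> Bool {
    let leftKey = kCMFormatDescriptionExtension_HasLeftStereoEyeView as String
    let rightKey = kCMFormatDescriptionExtension_HasRightStereoEyeView as String
    // A missing key, or a value that is not a Boolean, counts as "not flagged".
    let left = (extensions[leftKey] as? Bool) ?? false
    let right = (extensions[rightKey] as? Bool) ?? false
    return left && right
}
```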

Other keys that differ between the two, such as StereoCameraBaseline and HorizontalDisparityAdjustment, are also worth considering, and all of these properties could be combined into a single check.
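One possible way to combine them is sketched below. The three-way result and the priority given to the eye-view flags are my own assumptions, not an established rule: both flags are treated as conclusive, while the field of view, camera baseline or disparity adjustment alone only mark a video as "possibly" stereoscopic. `StereoClassification` and `classifyStereo(_:)` are hypothetical names.

```swift
import CoreMedia

/// Hypothetical classification combining several stereo-related extension keys.
enum StereoClassification: Equatable {
    case stereoscopic          // both eye views are flagged
    case possiblyStereoscopic  // no eye-view flags, but other stereo hints are present
    case monoscopic            // none of the keys below were found
}

func classifyStereo(_ extensions: [String: Any]) -> StereoClassification {
    // A flag counts only when present and set to true.
    func flag(_ key: CFString) -> Bool { (extensions[key as String] as? Bool) ?? false }
    // A hint counts as soon as the key is present, whatever its value.
    func isPresent(_ key: CFString) -> Bool { extensions[key as String] != nil }

    if flag(kCMFormatDescriptionExtension_HasLeftStereoEyeView)
        && flag(kCMFormatDescriptionExtension_HasRightStereoEyeView) {
        return .stereoscopic
    }

    let hints: [CFString] = [
        kCMFormatDescriptionExtension_HorizontalFieldOfView,
        kCMFormatDescriptionExtension_StereoCameraBaseline,
        kCMFormatDescriptionExtension_HorizontalDisparityAdjustment,
    ]
    return hints.contains(where: isPresent) ? .possiblyStereoscopic : .monoscopic
}
```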

Current status

Using the original strategy of collecting metadata before initiating any decoding session has proven to be a good fit for us. It lets us inspect a video's properties efficiently and make decisions without overhead, which allows us to list large numbers of selected videos and group them by type in a fast, lightweight fashion. Furthermore, this approach offers interesting flexibility, as it lets us examine diverse properties such as codec type, dimensions, and other format-specific extensions without any additional setup. Finally, early decision-making simplifies the app's modeling by reducing the number of mutations required and the complexity of tracking model properties.
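To illustrate the grouping, here is a sketch assuming each video is reduced to a small summary once, at import time. `VideoKind`, `VideoSummary` and `groupByKind(_:)` are hypothetical names; the kind itself would come from one of the metadata checks above.

```swift
/// Hypothetical type decided once, from metadata, when the video is imported.
enum VideoKind: Hashable {
    case spatial
    case monoscopic
}

/// Hypothetical immutable summary stored in the app model.
struct VideoSummary {
    let name: String
    let kind: VideoKind
}

/// Groups already-classified videos by kind without touching any decoder.
func groupByKind(_ videos: [VideoSummary]) -> [VideoKind: [VideoSummary]] {
    Dictionary(grouping: videos, by: \.kind)
}
```

Because the kind is fixed when the summary is created, grouping is a plain in-memory operation and the model never needs to be updated once playback starts.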

This metadata technique has consistently distinguished spatial videos from monoscopic ones in our initial tests, but we have to be aware that, given the API's limitations, we could run into edge cases. Therefore, this needs further testing… or we embrace uncertainty 🖖